I recently gave a presentation to the NYC Cassandra meetup about how we use Cassandra at Junction Networks, and even data distribution across geographically dispersed datacenters using Cassandra and NetworkTopologyStrategy. This post is a overview of the presentation material. Slides from the presentation are available as a PDF here.

I am assuming the reader has some level of familiarity with what Cassandra. For those who don't know what Cassandra is, it is a distributed multi-layer key value store. Similar to a distributed hash table, but it has many more features and complexities. For more information about Cassandra generally, check out http://cassandra.apache.org/.

Cassandra 101

Every time a Cassandra node receives a request to store data it consistently hashes the data, with md5 when using RandomPartitioner, to get a “token” value. The token range for data is 0 – 2^127.

Every node in a Cassandra cluster, or “ring”, is given an initial token. This initial token defines the end of the range a node is responsible for. Throughout the rest of this discussion I am going to use a hypothetical token range of 0-100 to make it simpler to demonstrate token range ownership and data placement.

Data placement

Cassandra is not “fixed” in the way that it places data around the ring. It uses two components, Snitches and Strategies, to determine which nodes will receive copies of data. Snitches define proximity of nodes within the ring. Strategies use the information Snitches provide them about node proximity along with an implemented algorithm to collect nodes that will receive writes.

Example: SimpleSnitch & SimpleStrategy

SimpleSnitch literally has no locality information about nodes, it just returns a list of all the nodes in a ring. SimpleStrategy will attempt to start writing data to the first node whose token is larger than the tokens data. If there are no nodes whose token is larger than the data's token, it will start at the node with the smallest token.

Lets say we have four nodes in our Cassandra ring with a token range of 0-100 and our intial tokens are assigned as follows: d->0, a->25, b->50, c->75. If we try to place data that has a token of 19, SimpleStrategy will ask for the list of nodes from SimpleSnitch, then it will write to the first node whose token is larger, which in this case is node a. If we had a replication factor of 2 (two copies of data should be written), SimpleStrategy will simply continue gathering the next highest token value node. So our second copy would go to node b.

This is one of the most common types of distribution methods that people implement with Cassandra: even token distribution between nodes so that each owns 25% of the data.

Smarter Snitches and Strategies

Cassandra has another Snitch called PropertyFileSnitch which maintains much more information about nodes within the ring. PropertyFileSnitch maintains a mapping of node, datacenter, and rack so that we can determine, for any node, what data center it is in, and what rack within that datacenter it is in. This information is statically defined in cassandra-topology.properties.

There is also a Strategy that is made to use the information from a PropertyFileSnitch called NetworkTopologyStrategy (NTS). The NTS algorithm is implemented as follows:

- Get Datacenters from strategy options: {DC0:1,DC1:1}

- For each data center entry

- Get replication factor

- Get a list of all endpoints for this datacenter from the snitch

- Create a ringIterator from the datacenter endpoints list and Collect endpoints to write to – only select an endpoint from the list for any given rack once (distribute across racks)

- If replication factor has not been met, continue to collect endpoints from the list, allowing racks that already contain an endpoint in the write list

- If our replication factor is not equal to our list of endpoints, throw an error because there are not enough nodes in the data center to meet the replication factor

There is a lot of important stuff going on here (see the presentation slides for more in depth coverage of what is going on internally), but to keep it brief, the key difference is that instead of iterating over an entire set of nodes in the ring, NTS creates an iterator for EACH datacenter and places writes discretely for each. The result is that NTS basically breaks each datacenter into it's own logical ring when it places writes.

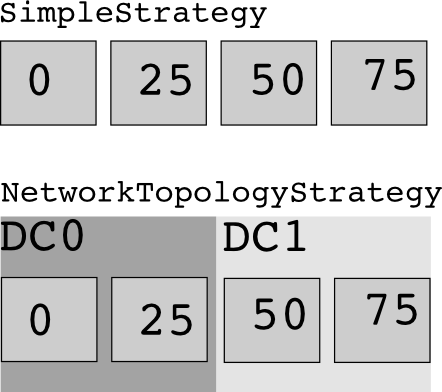

Here is a diagram of how each SimpleStrategy and NTS view the set of available nodes when trying to place data.

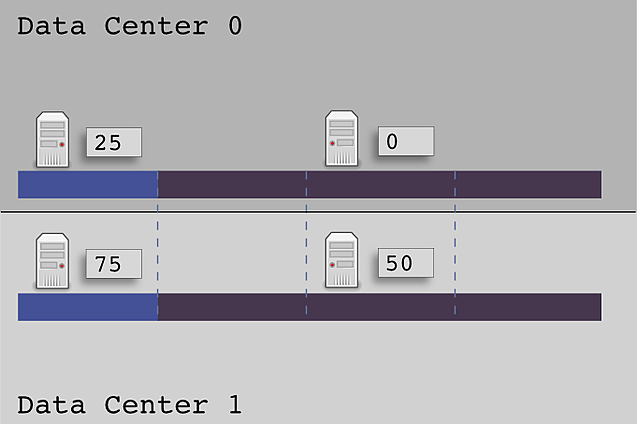

Because NTS has a segmented view of each data center, using the same evenly distributed tokens will cause a unbalanced placement of data within each data center. For DC0, anything from 1-25 will be placed on the node with initial token 25, while 26-100, and 0, will be placed on the node with initial token 0.

Here is a diagram showing the token range ownership when using NTS with even initial tokens.

Clearly this is not an acceptable method of token selection since two of our nodes will each contain 75% of the data. So what should we do? I propose that we do the same thing that NTS is doing, and look at each data center as it's own logical ring when we are assigning tokens.

Mirrored Offset Tokens

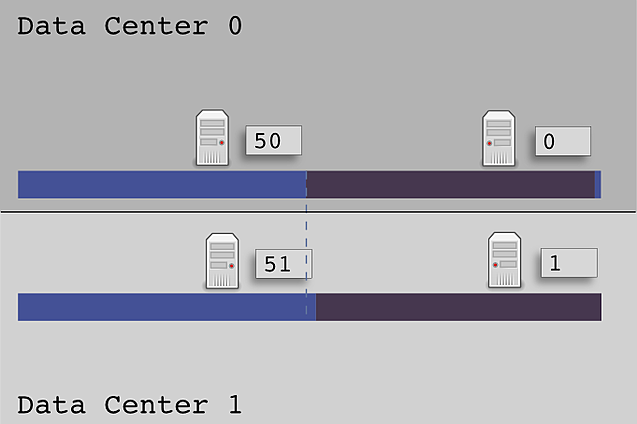

If we choose even tokens for each data center, in our example token range of 0-100, we would end up with tokens 0 and 50 for each of our nodes. We can not assign the exact same token to more than one node though, so we must offset tokens that are in conflict. For the first data center assign 0 and 50, for the second data center assign 1 and 51, for the third data center, 2 and 52, etc.

Here is a diagram showing the token range ownership when using NTS with mirrored offset tokens.

Using this initial token assignment each data center has an equal token distribution amongst it's nodes. If you have more nodes in one data center, that is okay too – simply calculate the tokens for that datacenter as if it were it's own ring and if there are any direct token conflicts, offset the tokens. This method will guarantee that each datacenter will have even load distributed across all the nodes within the datacenter.