Hyperscale data centers are popping up all over the globe. They’re not especially new, but they’re much more in the spotlight due to accelerated cloud adoption. Google, AWS, and Microsoft have built several of these centers across continents. Alibaba is expanding its footprint outside of China, and Facebook is in the game too.

IT infrastructure is built around scalability, so the evolution from the old office data center, or even standalone data centers, to hyperscale data centers is essentially scalability on steroids. That makes sense when you think about accelerated cloud adoption (also on steroids since March 2020) and the truly unfathomable amount of data stored and sent around the globe. We don’t mean to be hyperbolic, either: at a certain point, numbers become too large for the human brain to grasp. Think about how the IoT continues to expand. More devices means more data. If data grows exponentially, as it is doing, even massive data centers can’t keep up. Sure, you could keep building more conventional data centers, but at the scale giant companies like Facebook and AWS need, they’d take over every inch of land.

Data Center Versus Hyperscale Data Center

There’s technically no official demarcation for regular versus hyperscale data centers, and specifics vary from source to source. But the general consensus as of publication is that once a campus exceeds 5,000 servers and 10,000 square feet, it shifts to the hyperscale category. Size isn’t the only qualification, however. We think ZDNet sums up the general idea best:

“A hyperscale data center is less like a warehouse and more like a distribution hub, or what the retail side of Amazon would call a ‘fulfillment center.’ Although today these facilities are very large, and are operated by very large service providers, hyperscale is actually not about largeness, but rather scalability.”

Hyperscale, as the name suggests, isn’t just about more space and more servers; it’s about scalability, and about efficiency. The whole design focuses on processing more data with fewer resources per unit of work. Hyperscale success comes down to maximum efficiency through scaling out, not scaling up.

Quick Explainer:

Scaling up: The kind of scaling most of us are familiar with. It means adding power (more CPU, memory, or storage) or functionality to machines already in use.

Scaling out: Adding new machines to the network and spreading the workload across them.
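To make the distinction concrete, here is a toy Python model. It is only a sketch: the `Cluster` class, the capacity units, and the per-machine ceiling are hypothetical numbers chosen for illustration, and real capacity planning also has to account for networking, power, and cooling overhead.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cluster:
    """Toy model of a server fleet; capacities are hypothetical units of work."""
    node_capacities: List[int] = field(default_factory=lambda: [100, 100])
    max_node_capacity: int = 400  # hypothetical hardware ceiling for one machine

    def total_capacity(self) -> int:
        return sum(self.node_capacities)

    def scale_up(self, extra: int) -> None:
        """Scaling up: add power to machines already in use, capped per machine."""
        self.node_capacities = [
            min(c + extra, self.max_node_capacity) for c in self.node_capacities
        ]

    def scale_out(self, new_nodes: int, capacity: int = 100) -> None:
        """Scaling out: add new machines to the network."""
        self.node_capacities.extend([capacity] * new_nodes)


# Usage: five rounds of each strategy, starting from the same two-node fleet.
up = Cluster()
for _ in range(5):
    up.scale_up(100)

out = Cluster()
for _ in range(5):
    out.scale_out(2)

print(up.total_capacity(), out.total_capacity())  # 800 1200
```

In this toy model, scaling up stalls once each machine hits its ceiling (800 units after five rounds), while scaling out keeps growing as long as you can add machines (1,200 units across 12 nodes). That ceiling is the intuition behind hyperscale favoring scale-out.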

Designed For Maximum Efficiency

This means everything from the physical layout to climate control to the enormous amount of power and Internet bandwidth required. You may remember a point in our cloud resiliency blog about the importance of a whole-system overview. In that case, the goal is to scan the whole system for potential downtime triggers. For hyperscale data center servers, the goal is to manage power distribution and temperature. Aside from the IT equipment itself, climate control is usually the biggest power draw in any data center. One overheated server can spell danger for the machines next to it. Hyperscale designs plan for strategic distribution of workloads and power, along with automatic temperature monitoring, so that no single server or section gets maxed out without anyone noticing or responding.
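The monitoring-plus-distribution idea above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular operator does it: it assumes each server reports a temperature reading and a load fraction, and the thresholds, server names, and "coolest server with headroom wins" placement rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical limits for illustration only; real facilities set their own.
MAX_TEMP_C = 35.0
MAX_LOAD = 0.85


@dataclass
class Server:
    name: str
    temp_c: float  # latest reading from a temperature sensor
    load: float    # fraction of capacity in use, 0.0 to 1.0


def overheated(servers: List[Server]) -> List[str]:
    """Flag servers at or past a limit so none is maxed out unnoticed."""
    return [s.name for s in servers if s.temp_c >= MAX_TEMP_C or s.load >= MAX_LOAD]


def place_workload(servers: List[Server], demand: float) -> Optional[str]:
    """Pick the coolest server that can absorb `demand` without crossing a limit."""
    candidates = [
        s for s in servers
        if s.temp_c < MAX_TEMP_C and s.load + demand <= MAX_LOAD
    ]
    if not candidates:
        return None  # no safe home; a real system might queue the work or add capacity
    target = min(candidates, key=lambda s: (s.temp_c, s.load))
    target.load += demand
    return target.name


# Usage with a hypothetical four-server fleet.
fleet = [
    Server("rack1-a", 31.0, 0.60),
    Server("rack1-b", 36.5, 0.40),  # running hot
    Server("rack2-a", 28.0, 0.80),  # cool but nearly full
    Server("rack2-b", 29.5, 0.30),
]
flagged = overheated(fleet)          # ["rack1-b"]
first = place_workload(fleet, 0.2)   # "rack2-b": coolest server with headroom
second = place_workload(fleet, 0.5)  # None: no server can take it safely
print(flagged, first, second)
```

Checking both temperature and load before placing work mirrors the point in the text: the hot server is skipped even though it has spare capacity, and the cool but nearly full server is skipped even though it is running cold.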

Another of our blogs, on privacy by design, is relevant here too, because the same concept applies to hyperscale data centers. With privacy by design, you integrate security into IT infrastructure from the initial planning stage. Hyperscale data centers do the same with efficiency. Without an efficient setup built in throughout the design process, the center can’t fulfill its purpose.

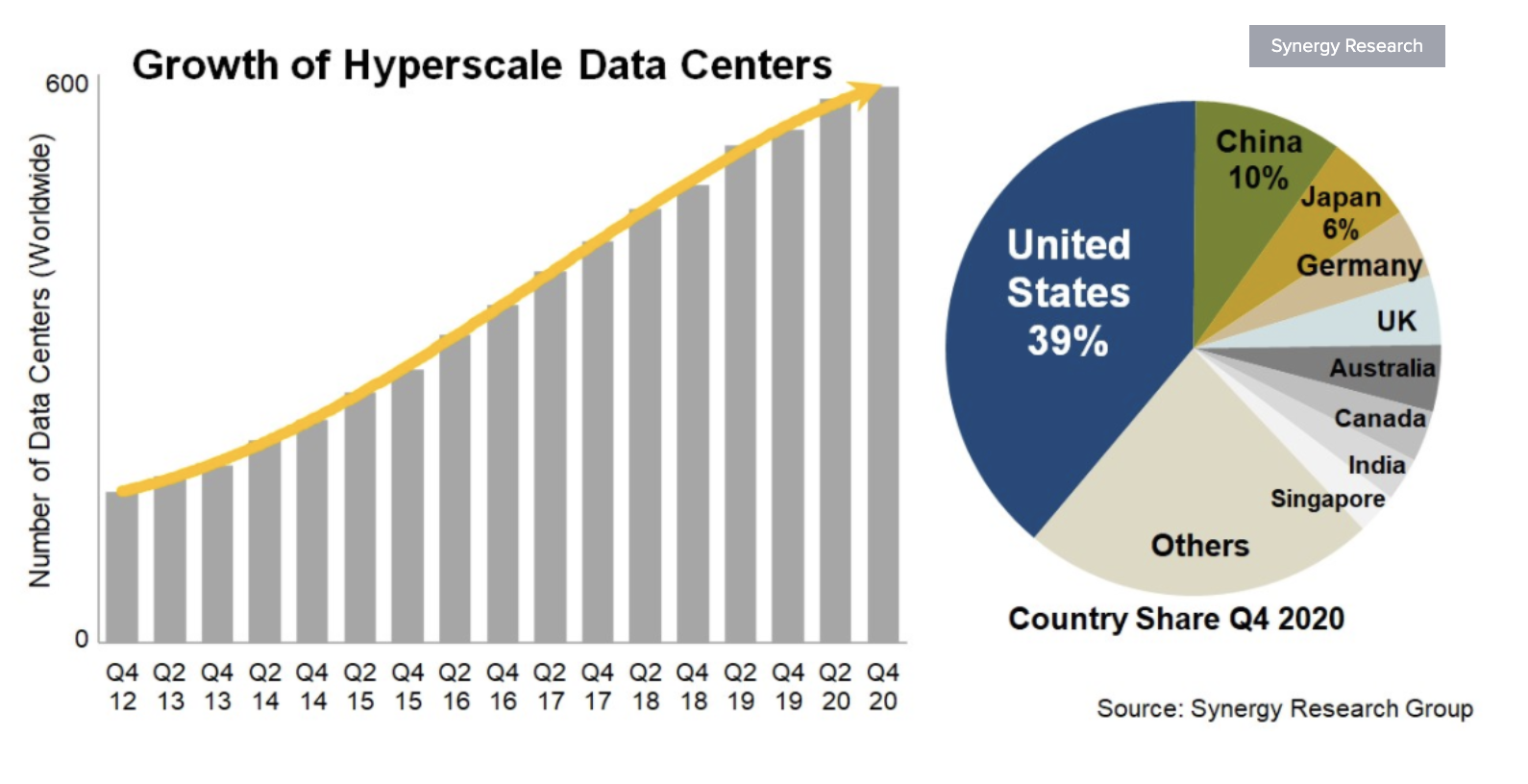

As we mentioned above, hyperscale data centers aren’t new. There were about 200 in 2015 and more than 600 by 2021, with more on the way. Three of the five largest are in China, with the biggest exceeding 10 million square feet. The other two on that list are in the United States. Fun fact: both of those run on 100% renewable energy (up to a point, but 500+ megawatts of renewable power is a lot). Expect to hear a lot more about these campuses going forward.